Abstract

At the department level, Agile processes are less prescriptive about how to increase delivery excellence across all of a department's teams. One approach is to provide implementation-free constraints and goals that drive teams toward better delivery, and then to encourage a culture of cross-team information exchange. The constraints and goals create direction and incentive for teams throughout the organization, while information exchange at the team level allows new 'best of class' solutions to propagate across the organization. By inserting a few organizational standard items into team Working Agreements, using Build Monitors to make quality visible, and holding learning events such as Book Groups, the organization can create a minimum "bar" across teams. That bar forces some teams to improve their delivery excellence, yet stays out of the way of teams that already deliver well (possibly with non-standard but innovative development methods).

Introduction

Most agile methodologies come with built-in forms of information exchange at the team level: daily standup meetings, retrospectives, sprint demos, pair programming, planning meetings that include the entire team, and code reviews. eXtreme Programming, for example, contains the most extreme form of viral information sharing within a team, pair programming, which has developers working with each other and improving all of their development craftsmanship (including soft skills) on a daily basis, although sometimes people can't get along with each other. Other forms are lightweight and helpful but reduce efficiency. Code reviews, for example, help people become better OO programmers (a part of craftsmanship), but the practice increases work-in-progress, creates an additional future integration point (integration of design ideas), discourages code experimentation (the experiment might not make it past the code review), and doesn't help people learn to use their software tools, such as the IDE, more efficiently.

Although most Agile methodologies come with good information exchange at the team level, how to direct efforts across the organization is rarely discussed. (Scrum has Scrum of Scrums and Meta Scrum. Other practices? Please use the blog comments to add more knowledge if you see something missing.) Here are strategies that I've seen implemented to good effect across an organization. They create a minimum bar of delivery excellence across all the teams, forcing some teams to discover better ways to deliver code without disrupting teams that already are excellent at delivering code.

Working Agreements

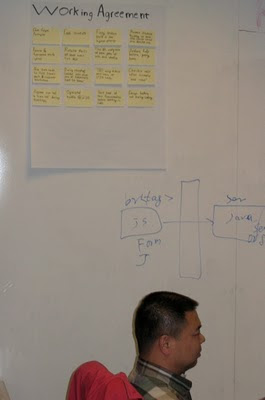

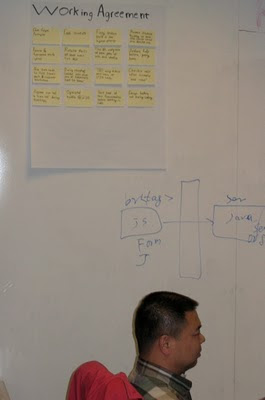

The best working agreements have fewer than eight items and are visible in the team's working area. Here is Fred. Off camera is the rest of his team. Although this working agreement was developed by the team, organizational standards were incorporated without being "too" prescriptive. This way, the team takes ownership of its "implementation" and uses its own tools and processes to accomplish the working agreement.

Team Working Agreement

Teams get a lot of value from having a written agreement on how they work together and handle day-to-day issues. This agreement often puts into writing the answers to the "typical" questions that teams that are not self-directed get answered by asking their project manager, "Hey, this happened, what should I do?" This working agreement goes by several names: Team Working Agreement, General Working Agreement, or Daily Working Agreement. The editorial comments in parentheses aren't explicitly written down on the working agreement, but are understood by the team.

Example:

Team Working Agreement

- Automated tests are added for all discovered production issues before the code is written

- Standups always include a dial-in (for teams that have remote team members/PO)

- Solving production fires is top priority

- Stop what you're doing to address failing automated unit tests

- The state of all automated tests is visible in the team area

- All system tests, unit tests, UI tests are executed by CI

- All unit tests are executed at least daily

- Comprehensive test passes are executed before release to live (all automated + manual)

- All code changes are developed using TDD (legacy code and new code)

- QA starts writing system tests on day one of sprint

- Everyone is a tester

Organizational Standard

Setting a few core standards across all teams for items that must appear in every Team Working Agreement works well. A common subset of the above that I've seen in practice is:

- all system tests, unit tests, UI tests are executed by CI

- all unit tests are executed at least daily

- visible burndown chart

- Scrum is practiced (this is usually implied)

Story Definition of Done

This is the team's agreement on what "done" means when they say, "this story is done." Every step in the definition must be met for the team to be "credited" with finishing the story, so that the story's estimate in Story Points/Units can be added to the team's Velocity. When the team starts a new Definition of Done, the ScrumMaster (SM) needs to be vigilant until the team has internalized it. If the team can't follow it, then we look for the "why not" in the Retrospective and find some way to handle the impediment (fix some problems, adjust the definition of done). This definition of done is important for maintaining the team's standard of potentially shippable software at the end of each sprint. The editorial comments in parentheses aren't typically written into definitions of done, but are understood by the team.

Example:

Story Definition of Done

- All public methods are unit tested (except for machine generated code that isn't changed by human hands, or DataValue objects that are "identity--I=I" getter/setters)

- Story functionality is tested with an automated system/UI test (also called acceptance tests, functional tests, ...)

- Manual tests are executed

- Rally is updated (Rally is an electronic story tracking tool)

Organizational Standard

- all public methods are unit tested

- stories have automated system tests (also called acceptance tests, ...)
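The crediting rule described for the Story Definition of Done can be sketched in a few lines of Python. This is only an illustration: the checklist keys, the `Story` class, and the sample stories are hypothetical names invented here, not part of any tracking tool. The point it shows is that a story's points count toward velocity only when every item in the definition is met.

```python
from dataclasses import dataclass, field

# Hypothetical checklist keys mirroring the example Story Definition of Done.
DEFINITION_OF_DONE = (
    "public_methods_unit_tested",
    "automated_system_test",
    "manual_tests_executed",
    "tracker_updated",
)


@dataclass
class Story:
    name: str
    points: int
    completed_items: set = field(default_factory=set)

    def is_done(self) -> bool:
        # A story is done only when every checklist item is satisfied.
        return all(item in self.completed_items for item in DEFINITION_OF_DONE)


def sprint_velocity(stories):
    """Sum the points of the stories that meet the whole Definition of Done."""
    return sum(story.points for story in stories if story.is_done())


stories = [
    Story("login form", 5, set(DEFINITION_OF_DONE)),
    # Missing the automated system test, so it earns no credit this sprint.
    Story(
        "password reset",
        3,
        {"public_methods_unit_tested", "manual_tests_executed", "tracker_updated"},
    ),
]
print(sprint_velocity(stories))  # 5
```

An all-or-nothing check like this keeps partially finished stories from inflating velocity; the unfinished story simply carries over and becomes a topic for the retrospective.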

Sprint Definition of Done

Because a sprint is time-boxed, the sprint is finished regardless of whether the items in the Sprint Definition of Done pass or fail, but the retrospective should be used to identify the root causes of any failures and create actions to solve them.

Example:

Sprint Definition of Done

- Each sprint, the code coverage increases

- All automated tests are green at the end of the sprint

- All manual tests for the stories are executed

Organizational Core Standard

- code coverage increases each sprint

- design issues go down each sprint

- automated test count (system and unit) goes up each sprint
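One way a team might check these sprint-over-sprint trends is sketched below. The `SprintMetrics` fields and the sample numbers are made up for illustration; a real team would pull the values from its own coverage and static-analysis tools. A failed check does not extend the sprint, it only produces a retrospective topic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SprintMetrics:
    # Hypothetical end-of-sprint measurements.
    coverage_percent: float
    design_issues: int
    automated_tests: int


def check_sprint_trends(previous, current):
    """Return a pass/fail flag for each organizational trend."""
    return {
        "code coverage increases": current.coverage_percent > previous.coverage_percent,
        "design issues go down": current.design_issues < previous.design_issues,
        "automated test count goes up": current.automated_tests > previous.automated_tests,
    }


# Illustrative numbers only.
previous = SprintMetrics(coverage_percent=61.0, design_issues=40, automated_tests=310)
current = SprintMetrics(coverage_percent=63.5, design_issues=42, automated_tests=335)

results = check_sprint_trends(previous, current)
for name, passed in results.items():
    print(f"{'PASS' if passed else 'FAIL'}: {name}")

# Failed items become retrospective topics; they do not extend the sprint.
retro_topics = [name for name, passed in results.items() if not passed]
```

Comparing against the previous sprint rather than a fixed target keeps the standard implementation-free: a team at 20% coverage and a team at 90% coverage are both asked only to improve.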

Other Working Agreements

The following working agreements may also be useful to teams: a Task Definition of Done and a Release Definition of Done (activities to be done before the software goes live, such as performance testing, security testing, or other work that impediments keep from getting done during typical sprints). The art is to find the fewest agreements, so we aren't buried by paperwork, while still keeping the ones that are necessary because problems happen when we don't have them.

Build Monitors

These devices show the "headlines" of the project's quality status. Anyone can "click" for details. This team modified the continuous integration system's CSS to get the clear and simple style they wanted. (Unfortunately, most CI environments have overly complicated dashboards.) The monitor on the left has the biz tier (unit tests) and web services tier (system tests) for the SAME app. The build was split into logical levels. The first level compiles, then runs unit tests for the biz tier, and then runs system tests for the web services tier. The monitor on the right shows the system tests that test the UI. These three levels are common. It's not hard to imagine that adding a SQL level would be useful for a team working with stored procedures. The success of one level cascades to the next level. A failure at level one (can't compile) stops it all.
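The cascading behaviour of the build levels can be sketched as follows. This is a minimal illustration, not how any particular CI server is configured: the exact split into steps is one reading of the description, and the lambdas are stand-ins for real compiler and test-runner invocations.

```python
from typing import Callable, List, Tuple

Level = Tuple[str, Callable[[], bool]]


def run_build(levels: List[Level]) -> List[Tuple[str, str]]:
    """Run levels in order; once one fails, mark the rest as skipped."""
    results = []
    failed = False
    for name, step in levels:
        if failed:
            results.append((name, "skipped"))
            continue
        passed = step()
        results.append((name, "green" if passed else "red"))
        failed = not passed
    return results


# Stand-in steps: a real build would invoke the compiler and test runners here.
levels = [
    ("compile", lambda: True),
    ("biz tier unit tests", lambda: True),
    ("web services system tests", lambda: False),
    ("UI system tests", lambda: True),
]

for name, status in run_build(levels):
    print(f"{status:>7}  {name}")
```

Ordering the levels from fastest to slowest means a compile error or a broken unit test shows up on the monitor quickly, without waiting for the slower system and UI tests to run.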

Book Groups

Books are a great way to spread information across many people in an organization. Just buying everyone in your department a book is a weak play (I still haven't read "The HP Way", which my manager gave me when I started work at HP). Books are great learning tools if they are actually used. I don't recommend buying people books unless they ask for them or the book comes with a plan for reading and using the knowledge. Book groups get together to discuss the reading and how to use the knowledge (or to actually demonstrate it). To encourage the cross-pollination of information, find someone to start a cross-organizational book group that meets each week over lunch (companies will often buy the lunch and the books if people are actually meeting about the books). Encourage the group to develop activities that put the knowledge to work: get members to sign up to teach, demonstrate, or lead the discussion for each chapter of the book. DO-CODE-ON-PROJECTOR with a mob watching is my personal favorite tactic. Books I recommend are Martin Fowler's Refactoring and Esther Derby and Diana Larsen's Agile Retrospectives. Teams I've been a part of have done this with books on Refactoring, GoF Design Patterns, Extreme Programming Explained, and Java Concurrency.

Conclusion

Delivering core standards for working agreements to all your teams creates quality bars that teams aim for and hurdle over. If the organization focuses on the desired outcomes and lets the teams work out how to reach them, the teams will find solutions that best fit their people and projects, and they'll become invested in the implementation. Adding collaborative, cross-organizational sharing and learning events that are driven by employees, such as book groups, gives employees a process for driving their own development and for collaborating and learning with members of other teams.

Troubleshooting

Here is a troubleshooting guide that covers some common problems that may occur when implementing the above activities.

- Team finished the sprint with zero velocity.

  - Maybe their working agreements were too hard. Inspect and adapt for the next sprint. Maybe they need an embedded coach for a sprint.

- Team releases with bugs.

  - Not enough testing. Zero-bug releases are expected.

- A team's CI environment isn't visible.

  - Are their tests failing? What are they hiding?

- Team refuses to try new practices or processes for even a sprint.

  - Too much release pressure? Is the team embedded in its old ways? Is the company culture so risk averse that any failure has political implications?

References:

XP practices http://en.wikipedia.org/wiki/Extreme_programming_practices

There's an impediment that I often encounter with TDD training: developers don't want to write unit tests because they're too comfortable with the develop/fixbugs (without test automation)->release->bugreport->develop/fixbugs->release cycle.

They don't know this, but they are forcing their organizations to endure this because:

- they don't know any other way,

- they're used to it, and

- the organization is used to living with this.

When I tell them that most of the time the teams I've been a part of as a developer or ScrumMaster have had zero-bug releases, and the rest of the time have had one or two problems that we could correct within hours to a day (because we have fully automated testing), I don't think they believe me. (I've done this on multiple projects, in Java and .NET, 1999-2006.)

A great organizational play would be to make this less acceptable (or less rewarding). So I ask the Internet: what kinds of policy can be put in place to change a culture in which releasing code with bugs is expected?